Artificial intelligence is here. But are we ready for it?

Although the term artificial intelligence was coined by the American computing pioneer John McCarthy as early as 1956, it is the past decade that has made it omnipresent. Today, hardly a day goes by without our hearing, reading, or talking about it. The decisive factor in its breakthrough into the everyday lives of ordinary personal computer users has undoubtedly been the fourth edition of the ChatGPT application. Its simplicity and accessibility have ensured the widespread adoption of a technology that had previously existed predominantly in technical and scientific environments. Now it has erupted into our daily lives, and it seems unlikely that we will ever do without it again.

However, few people consider the crucial questions that AI raises, which are technical and technological as well as ethical. Even fewer users understand what these systems entail and the challenges they bring. Humanity has never encountered anything comparable, which makes the situation akin to an encounter with extraterrestrial intelligence.

What are we actually talking about when we talk about artificial intelligence? The European Parliament defines it as follows: "Artificial intelligence is the ability of a machine to imitate human capabilities such as logical reasoning, learning, planning, and creativity." (European Parliament Topics, 2020). In colloquial terms, artificial intelligence refers to systems that mimic human cognitive processes, namely learning and problem-solving. Learning primarily requires a sufficient amount of data, meaning sources from which conclusions can be drawn. The same principle applies to artificial intelligence.

To function, AI systems require a vast amount of data and patterns, which they process in order to learn, make decisions, and generate responses. In the case of the aforementioned ChatGPT, the necessary dataset consists largely of publicly available texts published on the internet up to a certain point. Drawing on these texts and data, the system generates new texts or answers users' questions, all based on previously processed patterns, that is, information or data that the system has already used for learning.

Here, we encounter the biggest challenge that AI systems present. Processing an immense volume of data requires exceptionally high-performance computing infrastructure capable of handling vast datasets within extremely short timeframes. Recent years have seen a rapid evolution of specialized IT architectures with processing capabilities once deemed inconceivable. However, such high computational performance, combined with extensive storage requirements, inevitably results in substantial energy consumption, which in turn leads to considerable thermal output.

In other words, systems that enable AI operation are designed differently from conventional ICT systems. While they operate on similar foundations, they offer significantly greater processing and communication capacities and substantially larger data storage capabilities. Consequently, they impose different and much higher requirements on the environment in which they are deployed. And this brings us to the next challenge. It quickly becomes evident that the existing infrastructure and ICT equipment are inadequate, as they do not support the installation of the high-performance systems required for AI operation. Although most AI systems currently operate in dedicated data centers, administrators and users rightfully expect that they will soon migrate to smaller centers, closer to end users.

New challenges

AI systems will soon no longer operate solely as large language models based on information relevant to a broad user base. They will increasingly be integrated into specialized environments, such as the business sector, including small and medium-sized enterprises. Possible applications include electricity consumption forecasting and optimization, logistics, traffic congestion and peak prediction, field analysis, market trend forecasting, and customer behavior modeling. The potential for integrating AI into the operations of small and medium-sized businesses is limitless. The fundamental advantage of AI lies in its capacity to process vast amounts of data and extract meaningful insights, enabling decision-making and the optimization of procedures and workflows at a speed far surpassing human capabilities. Consequently, AI adoption will become indispensable for businesses seeking to maintain or enhance their competitive standing. This necessitates the rapid deployment of AI infrastructure and systems in the data centers of small-scale users and enterprises. However, the critical question remains: are they prepared for this transition? The answer is unequivocal: they are not.

To adequately prepare for this transition, it is essential to first understand the scope of the challenge. One critical factor is system load. Historically, the average power consumption of system cabinets in data centers has ranged between 5 and 10 kW per cabinet. In other words, each cabinet typically housed ICT equipment with a total connected power of 5 to 10 kW, with occasional instances reaching up to 20 kW. The majority of this equipment relied on air cooling, maintaining operational temperatures between 22 and 24 degrees Celsius. As previously discussed, AI systems use highly advanced processors and other computational components, resulting in significantly higher power consumption and, consequently, a substantial thermal burden on data centers. Consider, for instance, the Nvidia DGX B200 system, which integrates eight Nvidia Blackwell GPUs along with the necessary peripheral components. Such a system draws approximately 14 kW of electrical power and dissipates it as heat, and up to four units can be housed within a single system cabinet. This results in a total electrical and thermal load of up to 56 kW per cabinet, an intensity that is entirely unmanageable using conventional cooling methods. Even greater challenges arise with other AI systems, which, due to their extraordinarily high power demands, predominantly rely on water cooling. These systems can require between 130 and 400 kW per cabinet, with projections suggesting that power requirements may escalate to 1 MW per cabinet in the near future.
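The cabinet figures above can be checked with a few lines of arithmetic. The following is a minimal sketch in Python, using only the numbers quoted in the text (14 kW per DGX B200 system, four systems per cabinet, 5 to 10 kW for a conventional cabinet). The function and constant names are illustrative assumptions, not part of any vendor tool.

```python
# Figures quoted in the text; the names below are illustrative assumptions.
LEGACY_CABINET_KW = (5, 10)   # typical historical load range per cabinet
DGX_B200_KW = 14              # approximate draw of one DGX B200 system
UNITS_PER_CABINET = 4         # maximum systems per cabinet, per the text


def cabinet_load_kw(unit_kw: float, units: int) -> float:
    """Total electrical load of one cabinet in kW (dissipated as heat)."""
    return unit_kw * units


def density_ratio(ai_kw: float, legacy_kw: float) -> float:
    """How many times higher the AI cabinet load is than a legacy cabinet."""
    return ai_kw / legacy_kw


ai_cabinet_kw = cabinet_load_kw(DGX_B200_KW, UNITS_PER_CABINET)
print(f"AI cabinet load: {ai_cabinet_kw} kW")
for legacy_kw in LEGACY_CABINET_KW:
    ratio = density_ratio(ai_cabinet_kw, legacy_kw)
    print(f"Compared with a {legacy_kw} kW cabinet: {ratio:.1f}x")
```

Under these assumptions, a fully populated cabinet carries roughly six to eleven times the load of a conventional one, which illustrates why air cooling sized for 5 to 10 kW cannot simply be scaled up.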

Therefore, two key conclusions emerge. First, existing data centers are not ready for the installation of systems that support and enable AI. Second, despite the viability of cloud-based solutions, many administrators and users will soon confront the necessity of establishing their own AI infrastructure. Time is of the essence. The rapid expansion of AI, which we are currently witnessing, underscores the urgency of devising strategies to upgrade existing data centers to meet these evolving demands. Furthermore, a forward-thinking approach must be adopted to design future-ready data centers that can seamlessly adapt to the accelerating advancements in AI, ensuring the full realization of its transformative potential.

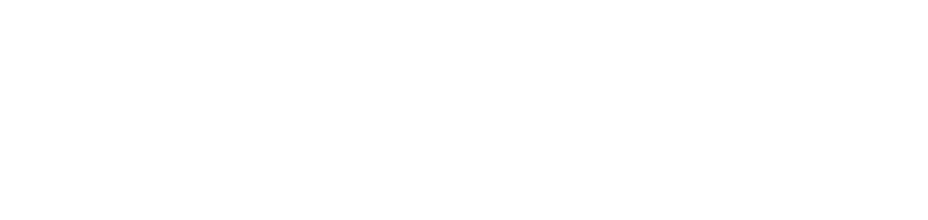

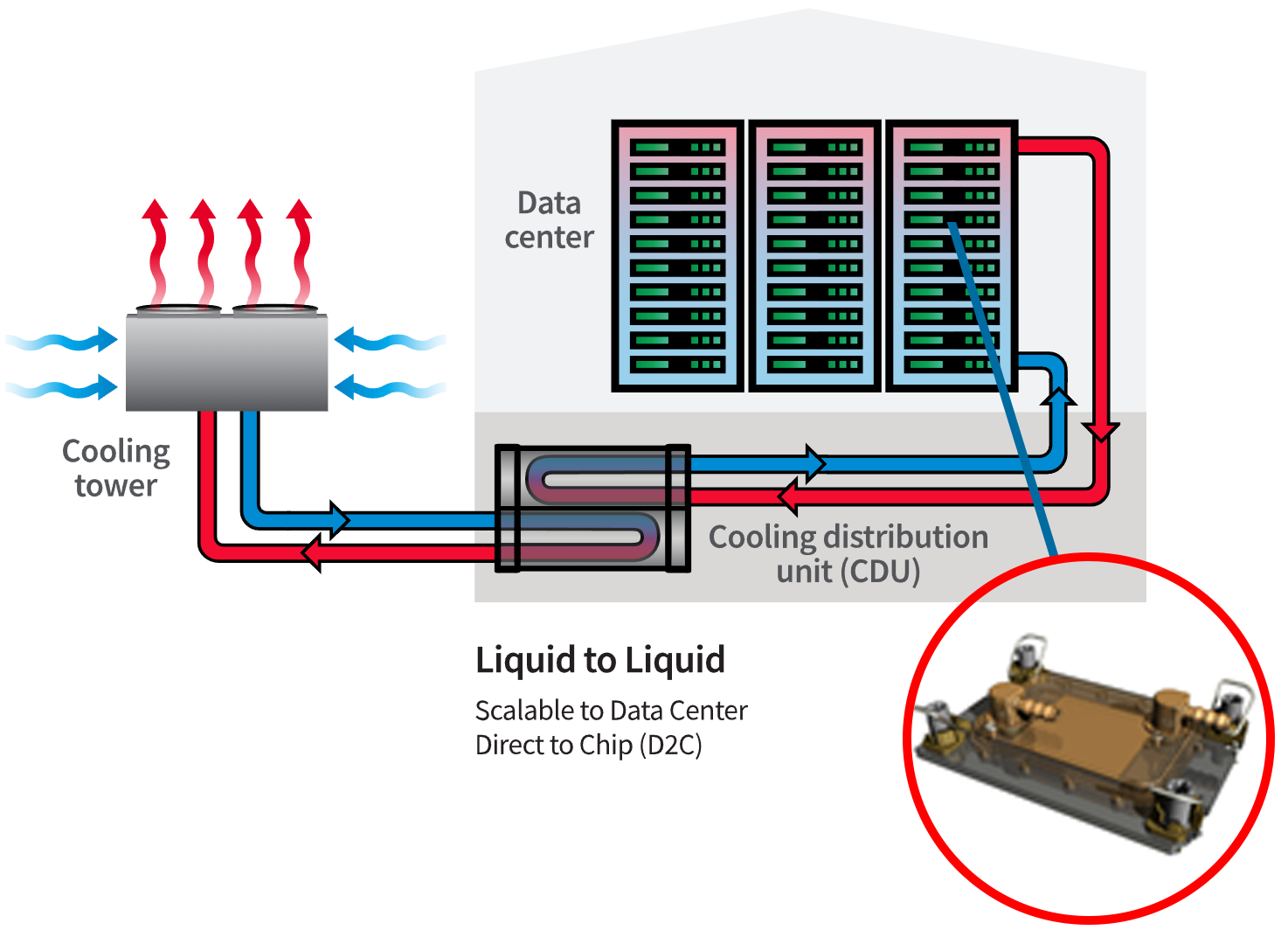

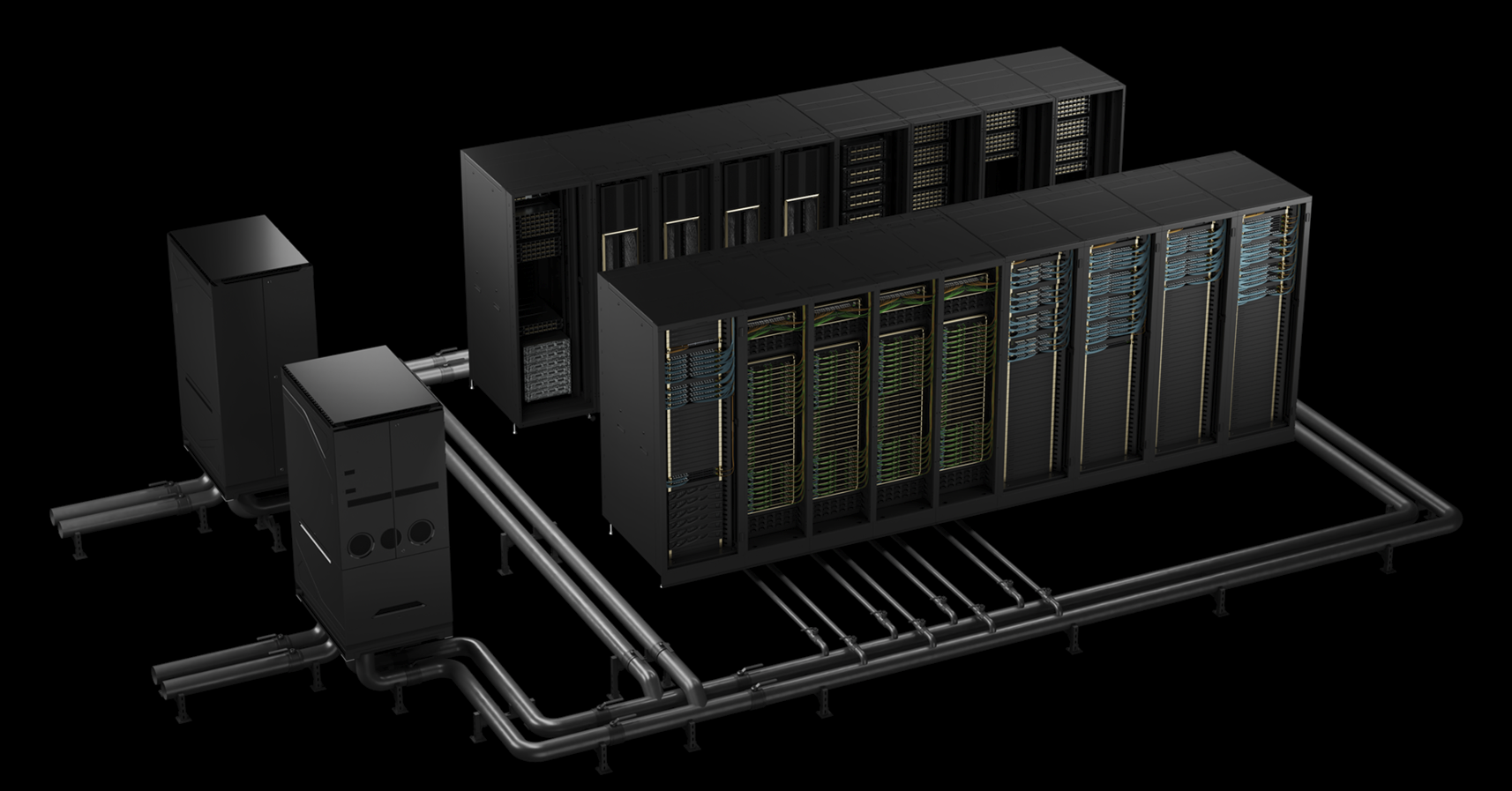

A new paradigm is emerging in data infrastructure: the hybrid data center, designed to accommodate both conventional ICT systems with low power density and AI-driven systems with significantly higher power demands at the same time. While air cooling remains a component of these facilities, the increasing density of AI workloads will soon exceed the capabilities of traditional cooling methods. This necessitates a fundamental shift in design philosophy, alongside the adoption of technologies and principles that, until recently, seemed unimaginable. Foremost among these is direct liquid cooling, which must be recognized as an imperative.

Hybrid centers will feature pre-installed piping networks for technical cooling systems, allowing for the seamless integration of new systems. Liquid cooling offers unparalleled energy efficiency, as emerging thermal management techniques will enable nearly year-round free cooling through compressor-free systems. A typical water-cooled system operates within a thermal range of 35 to 45 degrees Celsius, optimizing both performance and energy consumption.

Preparing for hybrid data centers

To effectively prepare for the imminent wave of emerging technologies, a fundamental shift towards greater openness and adaptability is required. Addressing key questions, such as how to proceed, what our core strengths are, and which systems should be maintained on-premises versus deployed in the cloud, is imperative. To help with the process, the following eight strategic steps should be undertaken:

- Prepare for the transition to liquid cooling for ICT infrastructure, taking into account the unprecedented temperature regimes of the cooling medium.

- Overcome resistance to surpassing existing paradigms and embrace technological advancements.

- Adapt power sources and comprehensive energy supply systems to meet the evolving demands of AI.

- Redesign the architecture of system cabinets and associated equipment to facilitate the integration of next-generation AI systems and ensure optimal operating conditions.

- Evaluate alternative energy and cooling supply infrastructures and align them with the requirements of new workloads.

- Plan for a scalable infrastructure that can dynamically adjust to future demands.

- Align operational support services with the unique challenges of AI-powered infrastructures.

- Revise and modernize the complete business approach to reflect the shifting landscape of hybrid data centers.

Along with other challenges, considerations, and discussions surrounding artificial intelligence, these steps will be the focal point of the NTRsync 2025 conference (March 26, Maribor, Slovenia). The event has attracted some of Europe's most esteemed IT and AI experts and leading global providers of advanced AI-driven solutions, offering attendees insights into cutting-edge, cost-efficient strategies for seamlessly integrating AI into their IT environments. Additionally, attendees will have the exclusive opportunity to tour one of Slovenia's first hybrid data centers featuring the Vega supercomputer. This guided tour, led by experts who played a key role in its design and deployment, will provide a firsthand view of AI-ready infrastructure's real-world functionality.

SOURCE: European Parliament Topics, 2020; European Parliament web portal:

https://www.europarl.europa.eu/topics/sl/article/20200827STO85804/kaj-je-umetna-inteligenca-in-kako-se-uporablja-v-praksi